Modern software development often involves juggling numerous containerized applications. Each container plays a specific role, and together they deliver the final product. But managing these containers (deploying them, scaling them up or down, and keeping them running smoothly) quickly becomes complex, especially when you need to orchestrate dozens or even hundreds of them. That’s where Kubernetes comes in.

So, what is Kubernetes? Kubernetes, often shortened to K8s, is an open-source system designed to automate the deployment, scaling, and management of containerized applications. This blog post explores Kubernetes in more detail: its core functionality, its benefits, and how it can change your approach to managing containerized applications.

What is Kubernetes?

Kubernetes is an open-source platform specifically designed to automate the deployment, scaling, and management of containerized applications. Think of it as a central command center for your containers.

For instance, think of your computer applications as a team working together to achieve a goal. Traditionally, each application might run on its own separate server, kind of like giving each team member their own office. This can be inefficient, as some applications might need more resources than others.

Containerization offers a more modern approach. It packages applications with everything they need to run – code, libraries, and settings – into self-contained units called containers. These containers are lightweight and portable, allowing them to share a single server efficiently, like a team working in an open-plan office.

But managing a large group of containers spread across multiple servers can get messy. This is where Kubernetes comes in. It acts like a conductor for your container crew. Kubernetes automates tasks like:

- Deployment: Placing your containers on the right servers and starting them up.

- Scaling: Adding or removing containers based on demand, ensuring your application has the resources it needs.

- Networking: Connecting your containers to each other and the outside world, allowing them to communicate seamlessly.

- Load Balancing: Distributing incoming traffic evenly across your containerized application, preventing any one container from getting overloaded.

What is a Kubernetes Cluster?

At the core of Kubernetes lies the cluster, a group of machines working together to run your containerized applications. Think of it as a symphony orchestra – each machine (node) acts like an instrument, and Kubernetes is the conductor, ensuring everyone plays their part in harmony.

These machines fall into two main categories:

- Control Plane Nodes (historically called master nodes): The brains of the operation, responsible for making high-level decisions about the cluster’s state. They handle tasks like scheduling containers onto nodes and keeping the cluster in its desired state.

- Worker Nodes: The workhorses that actually run your containerized applications. These nodes host the individual containers, providing the resources they need to function.

Together, the control plane (master) nodes and worker nodes create a powerful platform for deploying and scaling containerized applications. Later in this post, we’ll explore the components that make up a Kubernetes cluster in more detail. For a more technical dive, check out our dedicated blog post on Kubernetes clusters.

What is a Kubernetes Pod?

In Kubernetes, a pod is the smallest deployable unit that encapsulates one or more containers, along with shared resources such as storage volumes and networking configurations. Think of it as a logical hosting environment for your containers, where they can run together and communicate with each other seamlessly.

Pods are ephemeral and can be created, deleted, or replicated dynamically to meet the needs of your application. They provide a way to organize and manage related containers as a single unit, facilitating efficient resource utilization and simplifying deployment and scaling processes.
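To make the idea of a pod more concrete, here is a minimal sketch of a pod definition built as a Python dictionary and printed as JSON. The field names (`apiVersion`, `kind`, `metadata`, `spec`, `containers`, `volumes`, `volumeMounts`) come from the Kubernetes Pod API; the pod name, container names, images, and paths are placeholders invented for this example, and `containers_sharing` is a hypothetical helper rather than part of any Kubernetes library.

```python
import json

# Placeholder names and images, chosen only for illustration.
pod_manifest = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-pod", "labels": {"app": "web"}},
    "spec": {
        # A volume declared at the pod level can be mounted by any container in the pod.
        "volumes": [{"name": "shared-logs", "emptyDir": {}}],
        "containers": [
            {
                "name": "web",
                "image": "nginx:1.27",
                "ports": [{"containerPort": 80}],
                "volumeMounts": [
                    {"name": "shared-logs", "mountPath": "/var/log/nginx"}
                ],
            },
            {
                "name": "log-shipper",
                "image": "busybox:1.36",
                "command": ["sh", "-c", "tail -F /logs/access.log"],
                "volumeMounts": [{"name": "shared-logs", "mountPath": "/logs"}],
            },
        ],
    },
}


def containers_sharing(manifest, volume_name):
    """Return the names of the containers that mount the given pod volume."""
    return [
        c["name"]
        for c in manifest["spec"]["containers"]
        if any(m["name"] == volume_name for m in c.get("volumeMounts", []))
    ]


print(json.dumps(pod_manifest, indent=2))
print(containers_sharing(pod_manifest, "shared-logs"))
```

Both containers mount the same `shared-logs` volume, which is the kind of shared resource a pod provides. Because `kubectl apply -f` accepts JSON as well as YAML, the printed output could in principle be saved to a file and applied to a cluster.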

How Does Kubernetes Work?

Kubernetes functions like a well-oiled machine, with various components working together to manage your containerized applications. It follows a control plane and worker model, where a central control plane assigns work to a set of worker nodes.

The control plane, the brain of the operation, houses several key components:

- API Server: The command center, receiving instructions from users and coordinating with other components.

- Scheduler: The matchmaker, figuring out where to place containers based on resource needs.

- Controller Manager: The overseer, constantly monitoring the cluster’s state and ensuring it aligns with your desired configuration.

- etcd: The key-value store that holds vital cluster data like configuration and state information.
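The controller manager’s job of aligning actual state with desired state can be pictured as a reconciliation step. The sketch below is a deliberately simplified toy, not how Kubernetes controllers are implemented: the `reconcile` function, the pod dictionaries, and the `web-N` naming scheme are all assumptions made for illustration.

```python
from itertools import count


def reconcile(desired_replicas, running_pods, name_source):
    """Return the actions needed to move the actual state toward the desired state."""
    healthy = [p for p in running_pods if p["healthy"]]
    # Unhealthy pods are always removed so that they can be replaced.
    actions = [("delete", p["name"]) for p in running_pods if not p["healthy"]]

    missing = desired_replicas - len(healthy)
    if missing > 0:
        # Too few healthy pods: create replacements.
        actions += [("create", f"web-{next(name_source)}") for _ in range(missing)]
    elif missing < 0:
        # Too many healthy pods: remove the surplus.
        actions += [("delete", p["name"]) for p in healthy[desired_replicas:]]
    return actions


observed = [
    {"name": "web-0", "healthy": True},
    {"name": "web-1", "healthy": False},
]
print(reconcile(3, observed, count(2)))
# → [('delete', 'web-1'), ('create', 'web-2'), ('create', 'web-3')]
```

A real controller runs this kind of comparison continuously against the state stored in the cluster, which is why a crashed pod gets replaced without anyone issuing a command.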

Meanwhile, each node in the cluster runs a suite of essential components:

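- Kubelet: This agent manages the containers running on its machine, working in conjunction with a container runtime such as containerd or CRI-O. (Docker Engine was once the usual choice, but Kubernetes removed its built-in Docker integration in version 1.24.) The kubelet receives instructions from the control plane, has the runtime pull container images, and ensures the containers are running according to specifications.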

- Kube-proxy: This network proxy plays a crucial role in enabling communication between your containerized applications. It watches the Service objects defined in Kubernetes and the endpoint objects (Endpoints or EndpointSlices) that list the pods behind each Service. Kube-proxy translates this information into packet-forwarding rules on the node, typically using iptables or IPVS. By doing so, it ensures that traffic addressed to a Service reaches one of the appropriate pods within the cluster.
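The routing role described for kube-proxy can be illustrated with a small toy model. In a real cluster the forwarding is done by kernel rules on each node rather than by a Python object, so treat the `ServiceRouter` class, its method names, and the IP addresses below as hypothetical stand-ins that only show the idea: keep a table from each Service address to its current pod addresses, and pick one backend per connection.

```python
import random


class ServiceRouter:
    """Toy routing table: Service address -> list of backing pod addresses."""

    def __init__(self, seed=None):
        self._backends = {}
        self._rng = random.Random(seed)

    def sync(self, service_addr, pod_addrs):
        """Replace the backends for a Service, as if its endpoints had changed."""
        if pod_addrs:
            self._backends[service_addr] = list(pod_addrs)
        else:
            # No ready pods left: drop the entry so traffic is rejected.
            self._backends.pop(service_addr, None)

    def route(self, service_addr):
        """Pick one backend at random for a new connection."""
        backends = self._backends.get(service_addr)
        if not backends:
            raise LookupError(f"no endpoints for {service_addr}")
        return self._rng.choice(backends)


router = ServiceRouter(seed=0)
router.sync("10.96.0.10:80", ["10.244.1.5:8080", "10.244.2.7:8080"])
print(router.route("10.96.0.10:80"))

# A pod was replaced, so the endpoint list changes and the table is re-synced.
router.sync("10.96.0.10:80", ["10.244.3.9:8080"])
print(router.route("10.96.0.10:80"))
```

The point of the sketch is the `sync` step: clients keep using the same Service address while the set of pods behind it changes.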

Here’s how it all comes together:

- Deployment Request: You submit a request to deploy an application, usually as a declarative manifest sent to the API server. Kubernetes records this as the desired state and creates the pods needed to reach it.

- Scheduling Magic: The scheduler looks at each new pod and assigns it to an appropriate node based on available resources and any defined placement rules.

- Firing Up the Crew: The kubelet on each assigned node receives its instructions, pulls the container images, and starts the containers. Meanwhile, kube-proxy on each node watches for updates to Services and their endpoints and configures the network rules accordingly.

- Constant Vigilance: Kubernetes continuously compares the actual state of the cluster with your desired state. It restarts or replaces failed containers and, where autoscaling is configured, scales the number of replicas up or down.

- Networking: Communication channels are established, allowing containers to talk to each other and external services seamlessly.
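The scheduling step in the walkthrough above can be sketched as a filter-then-score decision. This is a toy version under stated assumptions: the real scheduler weighs many more factors, and the node names, resource figures, and the “most CPU left over” scoring rule here are invented for illustration.

```python
def pick_node(pod, nodes):
    """Filter out nodes that cannot fit the pod, then score the remaining ones."""
    feasible = [
        n
        for n in nodes
        if n["free_cpu_m"] >= pod["cpu_m"] and n["free_mem_mi"] >= pod["mem_mi"]
    ]
    if not feasible:
        return None  # nothing fits, so the pod would wait for capacity
    # Toy scoring rule: prefer the node with the most CPU left after placement.
    best = max(feasible, key=lambda n: n["free_cpu_m"] - pod["cpu_m"])
    return best["name"]


def bind(pod, node_name, nodes):
    """Reserve the pod's requested resources on the chosen node."""
    for n in nodes:
        if n["name"] == node_name:
            n["free_cpu_m"] -= pod["cpu_m"]
            n["free_mem_mi"] -= pod["mem_mi"]


nodes = [
    {"name": "node-a", "free_cpu_m": 500, "free_mem_mi": 1024},
    {"name": "node-b", "free_cpu_m": 2000, "free_mem_mi": 4096},
    {"name": "node-c", "free_cpu_m": 1500, "free_mem_mi": 512},
]
pods = [
    {"name": "api", "cpu_m": 1000, "mem_mi": 1024},
    {"name": "cache", "cpu_m": 250, "mem_mi": 256},
    {"name": "batch", "cpu_m": 4000, "mem_mi": 8192},
]

placements = {}
for pod in pods:
    target = pick_node(pod, nodes)
    placements[pod["name"]] = target
    if target is not None:
        bind(pod, target, nodes)

print(placements)
# → {'api': 'node-b', 'cache': 'node-c', 'batch': None}
```

The `batch` pod requests more than any node can offer, so it is left unplaced, which mirrors how an unschedulable pod waits until capacity becomes available.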

In essence, Kubernetes orchestrates the entire container lifecycle – deployment, scaling, and management – through a centralized control plane and distributed execution on the worker nodes. This frees developers from managing infrastructure complexities, allowing them to focus on building robust and scalable applications.

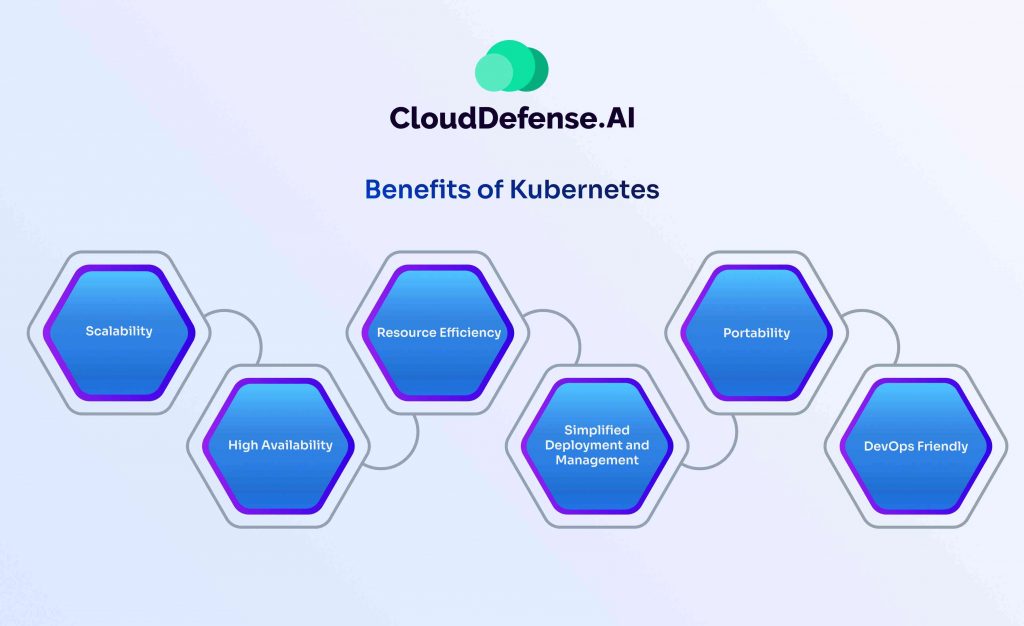

Benefits of Kubernetes

Having explored the inner workings of Kubernetes, let’s delve into the advantages it offers for developing and deploying modern applications. Here are some key benefits that make Kubernetes a game-changer:

Scalability: Kubernetes empowers you to effortlessly scale your applications up or down based on demand. Need to handle a surge in traffic? Kubernetes can automatically spin up additional container instances to meet the increased load. Conversely, during periods of low activity, resources can be scaled down, optimizing resource utilization and reducing costs.

High Availability: Imagine your application experiencing downtime due to a container failure. With Kubernetes, you don’t have to sweat! It employs self-healing mechanisms. If a container malfunctions, Kubernetes automatically detects the issue, restarts the container, or reschedules it on a healthy node. This ensures your application remains highly available and resilient to failures.

Resource Efficiency: Traditional application deployments can leave behind stranded resources. Kubernetes tackles this by intelligently packing containers onto nodes, maximizing resource utilization. Additionally, features like horizontal pod autoscaling ensure you only allocate the resources your application truly needs, leading to cost savings.

Simplified Deployment and Management: Gone are the days of manually managing deployments across multiple servers. Kubernetes streamlines the deployment process, allowing you to define your desired application state and leave the orchestration to Kubernetes. It handles tasks like container rollout, rollbacks, and health checks, freeing you to focus on application development.

Portability: Kubernetes applications are containerized, making them inherently portable. They can be deployed across different environments, whether on-premises, private cloud, or public cloud platforms, typically without requiring code modifications, although environment-specific configuration such as storage or load balancer settings may still differ. This flexibility empowers you to choose the infrastructure that best suits your needs.

DevOps Friendly: Kubernetes aligns perfectly with DevOps principles. It promotes infrastructure as code, allowing you to define your cluster configuration and application deployments using declarative manifests. This facilitates automation, collaboration, and continuous delivery workflows within your development teams.
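The horizontal pod autoscaling mentioned under Resource Efficiency is driven by a simple ratio: the desired replica count is the current count multiplied by the current metric value divided by the target value, rounded up. The sketch below applies that documented formula. The function name and the default bounds are assumptions for this example; the 10% tolerance mirrors the autoscaler’s documented default.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Apply the horizontal pod autoscaling ratio, clamped to the allowed range."""
    ratio = current_metric / target_metric
    # Small deviations from the target are ignored to avoid constant rescaling.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, wanted))


print(desired_replicas(4, current_metric=90, target_metric=60))   # → 6
print(desired_replicas(4, current_metric=30, target_metric=60))   # → 2
print(desired_replicas(4, current_metric=63, target_metric=60))   # → 4
print(desired_replicas(8, current_metric=120, target_metric=60))  # → 10
```

With four replicas averaging 90% CPU against a 60% target, the ratio is 1.5, so the autoscaler would ask for six replicas. The last call shows the upper bound capping what would otherwise be sixteen.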

What Can Kubernetes Do?

At its core, Kubernetes simplifies the complexities of building and running modern applications. It offers a treasure trove of features that streamline the process. Imagine having standardized services like local DNS and load balancing readily available for your applications, all easily managed by Kubernetes. Additionally, it automates common behaviors like restarting crashed containers, ensuring your applications stay up and running smoothly.

Kubernetes provides a defined set of building blocks like “pods,” “replica sets,” and “deployments” that group containers together. This modular approach makes it easy to design and configure complex applications with multiple interacting components. Developers can leverage a standard API to tap into even more sophisticated functionalities, allowing them to create applications that manage other applications – like a self-sustaining ecosystem.
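As a rough illustration of how these building blocks fit together, the sketch below builds a Deployment definition as a Python dictionary. The field layout follows the `apps/v1` Deployment API, while the `make_deployment` helper, the application name, and the image are hypothetical. A Deployment like this asks Kubernetes to keep a given number of pod replicas running, and Kubernetes manages a replica set on its behalf to do so.

```python
import json


def make_deployment(name, image, replicas):
    """Build a minimal Deployment manifest for a single-container application."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template below.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


deployment = make_deployment("web", "nginx:1.27", replicas=3)
print(json.dumps(deployment, indent=2))
```

Since `kubectl apply -f` accepts JSON as well as YAML, the printed output could be saved to a file and applied to a cluster. Changing `replicas` and re-applying is all it takes to request a different number of pods.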

In essence, Kubernetes frees developers from the burden of infrastructure management. By automating deployments, scaling resources, and handling application health, it allows them to focus on what truly matters – building innovative features for their applications.

But the benefits extend beyond development efficiency. Kubernetes safeguards application uptime by automatically recovering from issues like container crashes or server failures. This translates to a more reliable and resilient user experience, minimizing business disruptions and ensuring adherence to service level agreements. So, the next time you encounter a complex application, remember the invisible hand of Kubernetes working tirelessly behind the scenes, keeping it running smoothly and efficiently.

Conclusion

As we’ve explored, Kubernetes empowers developers to build and deploy modern applications with unprecedented ease and efficiency. From effortless scaling to self-healing mechanisms, it offers a comprehensive suite of features that streamline container orchestration. Yet, with great power comes great responsibility. As containerized applications become more prevalent, so do the security challenges that come with them. Securing your Kubernetes clusters is paramount, and that’s where CloudDefense.AI can help.

Our KSPM solution offers a comprehensive suite of tools to secure your Kubernetes environment. It proactively identifies and addresses security vulnerabilities, enforces best practices, and continuously monitors your cluster’s health. By integrating KSPM into your development workflow, you can build confidence in the security posture of your containerized applications. Book a free demo of our KSPM solution today and experience the power of secure and scalable container deployments.

Our team of experts is eager to guide you through the KSPM platform and showcase how it can revolutionize your container security posture. Don’t wait – take the first step towards securing your applications and unleashing the full potential of Kubernetes!