Building applications today often comes down to a choice: serverless or containers. Each offers distinct advantages, but the right pick depends on your goals. Serverless is great for hands-off scalability, while containers give you more control over the environment.

The decision isn’t just technical; it affects costs, performance, and development speed. Let’s break it down so you can figure out what’s best for your next project.

What is Serverless Computing?

In the past, deploying applications meant provisioning and managing our own servers. This approach, while effective at the time, brought along several challenges:

- Unnecessary Costs: Keeping servers running—even when idle—meant paying for unused resources.

- Maintenance Burden: Ensuring uptime, performing regular maintenance, and applying security updates were entirely our responsibility.

- Scaling Challenges: As usage fluctuated, scaling servers up or down required constant monitoring and adjustments, which added complexity and cost.

For smaller teams and startups, these issues posed significant obstacles, increasing time-to-market and overall delivery costs—both critical factors in modern software development.

This is where serverless computing steps in. Instead of you managing servers, your cloud provider (like AWS, Azure, or Google Cloud) dynamically allocates resources to execute your application’s code. You’re charged only for what you use, which can cut compute costs sharply compared to always-on servers, especially when your workload sits idle much of the time.

Although the term “serverless” suggests no servers are involved, it’s not entirely true. The infrastructure exists but is abstracted away, letting you focus on your code rather than server management. This shift simplifies development, reduces costs, and accelerates deployment timelines, making it an ideal choice for many modern applications.
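To make "focus on your code" concrete, here is a minimal sketch of a function in the shape AWS Lambda uses for Python handlers: a plain function that receives an `event` and a `context`. The payload field (`name`) is a hypothetical example, and the response dictionary assumes an HTTP-style trigger such as an API Gateway proxy integration. The local call at the bottom only simulates what the platform does on each request.

```python
import json


def lambda_handler(event, context):
    """Entry point the platform invokes once per event.

    Assumes an HTTP-style event whose JSON body may contain a
    hypothetical "name" field.
    """
    body = json.loads(event.get("body") or "{}")
    name = body.get("name", "world")
    # Response in the statusCode/headers/body shape used by
    # API Gateway proxy integrations.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Simulate one invocation locally; in production the provider
    # supplies the event and context objects.
    fake_event = {"body": json.dumps({"name": "serverless"})}
    print(lambda_handler(fake_event, context=None))
```

Notice what is missing: no server setup, no port binding, no process management. The provider decides when and where this function runs.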

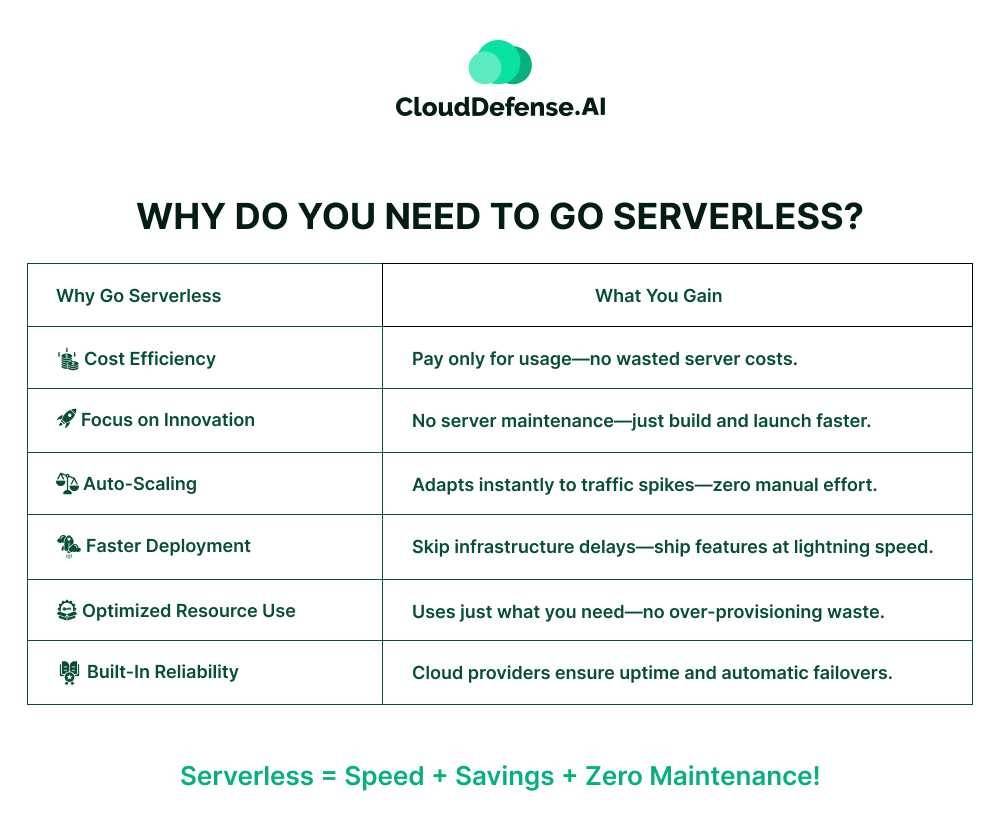

Why Go Serverless?

Let’s get straight to the point: managing servers is a pain. If you’ve ever had to handle traditional infrastructure, you know how much time, money, and focus it drains. Serverless computing fixes these inefficiencies, and here’s why you should care.

Cost Efficiency

Think about this: with traditional servers, you’re paying to keep them running 24/7—even if your app is barely used during off-hours. That’s like keeping the lights on in a room no one’s in. With serverless, you only pay for what you use. This isn’t just a cost-saving feature; it’s a smarter way to allocate your budget.

Focus on Development, Not Infrastructure

Managing servers isn’t why you got into tech, is it? Applying security patches, ensuring uptime, dealing with scaling—it’s all busywork. Serverless lets you offload all of that to AWS, Azure, or Google Cloud. They take care of the infrastructure so you can focus on shipping features and solving real problems for your users.

Scaling Shouldn’t Be Your Problem

Traffic spikes can be unpredictable. One moment, your app is cruising along; the next, it’s flooded with users. If you’re running your own servers, scaling up and down is on you, and it’s a headache. Serverless handles scaling automatically, up to your provider’s concurrency limits. That doesn’t just save you stress; it keeps your app responsive as demand rises and falls.
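A rough way to reason about what automatic scaling has to do: the number of function instances running at once is approximately the request rate multiplied by the average execution time (Little's law). The sketch below applies that estimate and caps it at a concurrency limit. The default limit of 1000 is an illustrative assumption; providers set their own per-account or per-region quotas.

```python
import math


def required_concurrency(requests_per_second: float, avg_duration_s: float) -> int:
    """Estimate instances running at once via Little's law: L = arrival rate x time in system."""
    return math.ceil(requests_per_second * avg_duration_s)


def scaling_plan(requests_per_second: float, avg_duration_s: float,
                 concurrency_limit: int = 1000) -> dict:
    """Compare the estimate with a concurrency limit.

    The default of 1000 is an assumption for illustration; check your
    provider's actual quotas.
    """
    needed = required_concurrency(requests_per_second, avg_duration_s)
    return {
        "needed": needed,
        "served_concurrently": min(needed, concurrency_limit),
        "throttled": needed > concurrency_limit,
    }


if __name__ == "__main__":
    # Quiet period: 8 requests/s, each taking 250 ms -> about 2 instances.
    print(scaling_plan(8, 0.25))
    # Spike: 6000 requests/s at 250 ms -> 1500 instances, above the assumed limit.
    print(scaling_plan(6000, 0.25))
```

The platform does this arithmetic for you, but the limit still matters: a spike beyond it gets throttled rather than served.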

Faster Time-to-Market

The quicker you can launch, the better. Traditional infrastructure slows you down. Provisioning servers, configuring environments—it all takes time. Serverless eliminates these steps, letting you focus on building and releasing. If speed matters to your business (and it should), serverless is the way forward.

Better Resource Allocation

Serverless forces you to think in terms of efficiency. You’re not over-provisioning; you’re using exactly what you need and nothing more. This isn’t just cost-effective—it’s responsible. It aligns your usage with your actual needs, making your operations leaner and more efficient.

Built-In Reliability

Downtime is expensive, and server maintenance is a distraction. Serverless platforms build redundancy and fault tolerance into their systems. That means fewer sleepless nights worrying about outages and more time focusing on your product.

In short, serverless computing isn’t just a technical shift—it’s a strategic one. It allows you to reallocate your energy, your team’s focus, and your budget toward what really matters: delivering value to your users. If you’re not thinking about serverless, you’re likely wasting resources and time. And that’s not a good position to be in.

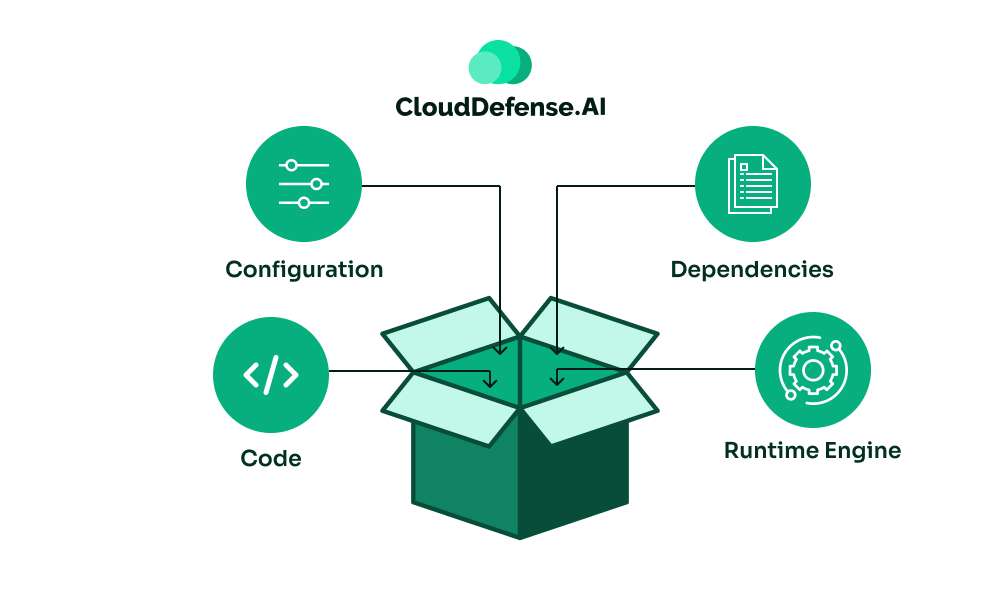

What are Containers?

Containers are lightweight, portable units that package an application along with its dependencies, ensuring it runs consistently across different environments.

For instance, if you’ve ever deployed an application, you’ve probably dealt with one frustrating issue: it works perfectly in one environment but crashes in another. That’s where containers come in. They fix this. And they do it in a way that makes your development process faster, more efficient, and way less frustrating.

Here’s how they help:

When you move an application, say from your laptop to a test server or to production, things can break. Dependencies, configurations, library versions: there’s a lot that can go wrong. Containers solve this by bundling everything the application needs into one portable, isolated package. Wherever that container goes, it behaves the same way.
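The "works here, breaks there" problem usually comes down to environment drift. This hypothetical sketch compares the dependency versions two environments report and lists every mismatch; a container image sidesteps the comparison by shipping one pinned set of versions everywhere. The package names and versions are invented for illustration.

```python
def environment_drift(expected: dict[str, str], actual: dict[str, str]) -> dict[str, tuple]:
    """Return {package: (expected_version, actual_version)} for every mismatch.

    A package missing from either side shows None on that side.
    """
    drift = {}
    for package in sorted(expected.keys() | actual.keys()):
        want, have = expected.get(package), actual.get(package)
        if want != have:
            drift[package] = (want, have)
    return drift


if __name__ == "__main__":
    # Hypothetical versions reported on a laptop and on a test server.
    laptop = {"python": "3.12.1", "requests": "2.31.0", "openssl": "3.0.13"}
    test_server = {"python": "3.9.18", "requests": "2.31.0"}
    print(environment_drift(laptop, test_server))
    # {'openssl': ('3.0.13', None), 'python': ('3.12.1', '3.9.18')}

    # A container image carries one pinned set, so every environment
    # running that image reports the same versions: no drift.
    image = dict(laptop)
    print(environment_drift(image, dict(image)))  # {}
```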

Now think about what this means for you:

- No more compatibility issues: Your app runs the same way everywhere. Period.

- Faster development cycles: Teams can work independently on different parts of the app without stepping on each other’s toes.

- Scalability without drama: Containers let you scale individual components, not the whole system.

What’s Inside a Container?

When you package an application into a container, here’s what you’re actually bundling:

- The application code itself.

- All dependencies (frameworks, libraries, etc.).

- The language runtime, system tools, and binaries it needs to function.

- Key configuration files to ensure smooth operation.

This makes the container largely self-sufficient. Apart from the host’s kernel and a container runtime (such as containerd or Docker), it doesn’t rely on the underlying infrastructure to provide anything.

Why Do We Need Containers?

If you’re operating in an Agile or DevOps environment, containers are close to essential. They make microservices practical, letting you break your application into smaller, focused components. That means faster deployments, easier updates, and a better way to handle scaling.

Without containers, scaling becomes an expensive guessing game. With containers, you can pinpoint exactly what needs more resources and adjust in real time.

Running one or two containers is simple. But what about dozens or hundreds? That’s where orchestration tools like Kubernetes or Docker Swarm come in. These tools:

- Distribute containers across machines.

- Monitor and restart containers automatically if something goes wrong.

- Scale your application components up or down based on demand.
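Orchestrators like Kubernetes work by reconciliation: they repeatedly compare the desired state with what is actually running and act on the difference. The toy loop below is a hypothetical, heavily simplified sketch of that idea covering the three duties listed above (placement, restarting failures, scaling). It is not how Kubernetes or Docker Swarm are implemented, and every name in it is invented.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Cluster:
    """Hypothetical in-memory cluster: node name -> list of container ids."""
    nodes: dict[str, list[str]]
    failed: set[str] = field(default_factory=set)
    _ids: count = field(default_factory=lambda: count(1))

    def running(self) -> list[str]:
        return [c for cs in self.nodes.values() for c in cs if c not in self.failed]

    def start(self) -> str:
        # Distribute: place the new container on the least-loaded node.
        node = min(self.nodes, key=lambda n: len(self.nodes[n]))
        cid = f"web-{next(self._ids)}"
        self.nodes[node].append(cid)
        return cid

    def stop(self, cid: str) -> None:
        for cs in self.nodes.values():
            if cid in cs:
                cs.remove(cid)
        self.failed.discard(cid)


def reconcile(cluster: Cluster, desired_replicas: int) -> None:
    """One pass of a control loop: clear failed containers, then scale to target."""
    # Monitor and restart: remove failed containers so they are replaced below.
    for cid in list(cluster.failed):
        cluster.stop(cid)
    running = cluster.running()
    # Scale up to the desired count...
    for _ in range(desired_replicas - len(running)):
        cluster.start()
    # ...or scale down by stopping the surplus.
    for cid in running[desired_replicas:]:
        cluster.stop(cid)


if __name__ == "__main__":
    cluster = Cluster(nodes={"node-a": [], "node-b": []})
    reconcile(cluster, desired_replicas=3)
    print(cluster.nodes)  # {'node-a': ['web-1', 'web-3'], 'node-b': ['web-2']}

    cluster.failed.add("web-2")  # simulate a crash
    reconcile(cluster, desired_replicas=3)
    print(cluster.nodes)  # {'node-a': ['web-1', 'web-3'], 'node-b': ['web-4']}

    reconcile(cluster, desired_replicas=1)  # demand drops
    print(cluster.nodes)  # {'node-a': ['web-1'], 'node-b': []}
```

Real orchestrators run this kind of loop continuously and add health checks, scheduling constraints, and rolling updates on top.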

This way, containers solve real problems you’re probably already dealing with. They let you move faster, reduce errors, and build systems that can grow with you. If you’re not using them yet, ask yourself: how much longer can you afford to ignore a tool that fundamentally improves the way software is built and deployed?

Serverless vs. Containers: Key Differences

When deciding between serverless and containers, it’s not about which is better. It’s about which fits your needs. Both are powerful, but they solve different problems and come with unique strengths. Let’s break it down so you can make the right choice.

Deployment and Management

- Serverless: You don’t worry about infrastructure. Your cloud provider’s serverless platform (such as AWS Lambda or Azure Functions) handles scaling, patching, and uptime. You focus purely on the code.

- Containers: Containers give you control. You package your app and its dependencies, and while tools like Kubernetes can handle scaling and orchestration, you still manage more of the infrastructure compared to serverless.

So, if you’re someone who wants simplicity and doesn’t want to deal with servers, serverless wins here. But if you prefer to fine-tune and customize your infrastructure, containers are the better choice.

Scaling

- Serverless: Scaling is automatic and near-instant. When demand spikes, serverless platforms adjust resources dynamically. You pay only for what’s used, which eliminates idle costs. However, cold starts (the delay while a new instance spins up after inactivity) can occasionally slow things down.

- Containers: Containers also scale, but it requires configuration and orchestration through tools like Kubernetes. The process isn’t as seamless as serverless, but it gives you more control over how and when scaling happens.

So, ask yourself: Do you want scaling handled automatically, or do you need the ability to customize how it works?
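As an example of what "configuring scaling yourself" looks like with containers, the Kubernetes Horizontal Pod Autoscaler documents its core calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). Below is a simplified Python re-implementation of that formula to show the knobs you own (target metric, min/max replicas, tolerance). It omits stabilization windows, readiness handling, and other details, and it is not the HPA's actual code.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified version of the HPA scaling formula.

    Scaling is skipped when the metric ratio is within `tolerance` of 1.0
    (0.1 mirrors the HPA's documented default). The result is clamped to
    [min_replicas, max_replicas], bounds you would set in the HPA spec.
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    print(desired_replicas(4, current_metric=90, target_metric=60))   # 6: scale out
    print(desired_replicas(4, current_metric=63, target_metric=60))   # 4: within tolerance
    print(desired_replicas(4, current_metric=15, target_metric=60))   # 1: scale in
    print(desired_replicas(8, current_metric=120, target_metric=50))  # 10: capped at max
```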

Costs

- Serverless: You’re billed strictly for usage. If your app isn’t running, you’re not paying. This is ideal for applications with variable or unpredictable workloads since it avoids wasted resources.

- Containers: With containers, you pay for the resources you’ve allocated, whether your app is fully utilizing them or not. This works well for stable workloads where resource usage is predictable.

If your app has sporadic traffic, serverless can keep costs low. For consistent, long-running workloads, containers are often more cost-effective.
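A back-of-the-envelope model makes this trade-off concrete. Serverless typically bills per request plus compute time measured in GB-seconds, while an always-on container bills for every hour it is allocated. All prices below are hypothetical placeholders, not any provider's current rates, and the assumption that four instances can carry the steady load is also illustrative.

```python
def serverless_monthly_cost(requests: int, avg_duration_s: float, memory_gb: float,
                            price_per_million_requests: float,
                            price_per_gb_second: float) -> float:
    """Pay-per-use: a per-request charge plus GB-seconds of compute actually consumed."""
    request_cost = requests / 1_000_000 * price_per_million_requests
    compute_cost = requests * avg_duration_s * memory_gb * price_per_gb_second
    return request_cost + compute_cost


def container_monthly_cost(instances: int, price_per_instance_hour: float,
                           hours: float = 730) -> float:
    """Allocated capacity: every hour is billed, busy or idle (about 730 h per month)."""
    return instances * price_per_instance_hour * hours


if __name__ == "__main__":
    # Hypothetical prices, for illustration only.
    PER_MILLION_REQUESTS = 0.20
    PER_GB_SECOND = 0.00002
    PER_INSTANCE_HOUR = 0.04

    # Sporadic: 1M requests/month (250 ms, 0.5 GB each) vs. one always-on instance.
    sporadic = serverless_monthly_cost(1_000_000, 0.25, 0.5, PER_MILLION_REQUESTS, PER_GB_SECOND)
    print(f"sporadic: serverless ${sporadic:.2f} vs container "
          f"${container_monthly_cost(1, PER_INSTANCE_HOUR):.2f}")

    # Steady: 100M requests/month, assuming 4 instances can carry that load.
    steady = serverless_monthly_cost(100_000_000, 0.25, 0.5, PER_MILLION_REQUESTS, PER_GB_SECOND)
    print(f"steady:   serverless ${steady:.2f} vs container "
          f"${container_monthly_cost(4, PER_INSTANCE_HOUR):.2f}")
```

With these made-up numbers the sporadic workload costs about $2.70 on serverless versus $29.20 for a mostly idle instance, while the steady workload flips: about $270.00 versus $116.80. Rerun the model with your provider's published pricing before drawing conclusions.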

Startup Time

- Serverless: Serverless is optimized for speed, but cold starts can introduce slight delays when an instance is activated after being idle.

- Containers: Containers typically take longer to initialize but don’t experience cold starts once running. This makes them more predictable for applications where every millisecond counts.

Think about your use case—does a slight delay matter, or do you need constant readiness?
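One common way to soften cold starts is to do expensive setup once per instance, outside the per-request path, so warm invocations reuse it. Platforms such as AWS Lambda keep module-level state alive between invocations on the same warm instance, though that reuse is never guaranteed. The sketch below simulates the pattern; `expensive_setup` is a hypothetical stand-in for loading configuration or opening a database connection.

```python
import time

# Module-level state survives between invocations while the instance stays warm.
_resources = None


def expensive_setup() -> dict:
    """Hypothetical stand-in for slow initialization (config, SDK clients, DB pools)."""
    time.sleep(0.2)
    return {"db": "connected"}


def handler(event, context):
    global _resources
    cold = _resources is None
    if cold:
        # Only the first invocation on a fresh instance pays this cost.
        _resources = expensive_setup()
    return {"cold_start": cold, "db": _resources["db"]}


if __name__ == "__main__":
    for i in range(3):
        start = time.perf_counter()
        result = handler({}, None)
        elapsed_ms = (time.perf_counter() - start) * 1000
        print(f"invocation {i}: cold={result['cold_start']} took {elapsed_ms:.0f} ms")
```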

Flexibility

- Serverless: You trade flexibility for simplicity. You’re limited to the environments, runtimes, and languages your cloud provider supports. For most modern use cases, that’s fine. But if your application requires something custom or niche, this can be a limitation.

- Containers: With containers, you’re in charge of everything—runtime, OS, and environment. This makes them perfect for specialized setups or legacy systems that don’t fit into the constraints of serverless.

If your app is straightforward, serverless keeps things simple. If you need more control or customization, containers are the way to go.

Use Cases

- Serverless: Best for short-lived, event-driven applications—like APIs, scheduled tasks, or backend services with unpredictable workloads.

- Containers: Ideal for long-running applications, microservices architectures, and scenarios where you need full control over the environment.

Serverless and containers aren’t competing technologies—they’re complementary. If your priority is agility and minimal infrastructure management, go serverless. If you need control, flexibility, or are working with complex environments, containers are the way to go.

At the end of the day, the choice depends on what your application needs and how much control you want over the infrastructure.

Here’s a table summarizing the key differences between serverless and containers:

| Aspect | Serverless | Containers |
| --- | --- | --- |
| Deployment and Management | Fully managed by cloud providers. You focus on writing code while scaling, patching, and uptime are handled for you. | Requires packaging the application with its dependencies. You manage the infrastructure using tools like Kubernetes or Docker Swarm. |
| Scaling | Automatic, dynamic scaling. Resources adjust near-instantly based on workload. | Requires explicit configuration and orchestration to scale up or down. Scaling can be customized to fit specific needs. |
| Cost Efficiency | Pay-as-you-go model. Charges only for resources used, with no idle costs. | Charges based on allocated resources, whether fully utilized or not. Suitable for predictable, stable workloads. |
| Startup Time | Near-instant startup, but can experience delays due to cold starts after inactivity. | Longer initialization times compared to serverless, but no cold starts once containers are running. |
| Flexibility | Limited to the runtimes, environments, and languages supported by the cloud provider. | High flexibility. Full control over runtime, OS, and environment. Suitable for custom or legacy setups. |
| Use Cases | Best for event-driven applications, APIs, lightweight tasks, or workloads with unpredictable demand. | Ideal for long-running applications, microservices, and environments where consistency between dev, test, and production is critical. |
| Infrastructure Responsibility | Minimal. The provider manages the underlying infrastructure; you remain responsible for your code, permissions, and configuration. | You are responsible for maintaining the infrastructure, including updates, uptime, and security. |
| Workload Suitability | Perfect for sporadic workloads or applications where usage fluctuates. | Suitable for consistent, long-running workloads where resource needs are predictable. |

Choosing Between Serverless vs. Containers: Which Fits Your Scenario?

When deciding between serverless and containers, it’s all about aligning the technology with the specific needs of your project. Let’s break it down with scenarios to help you make the right choice.

Scenario 1: Speed and Simplicity are Non-Negotiable

If you’re in a situation where time is critical—say you’re a startup launching an MVP—serverless is the way to go. You can skip all the overhead of managing infrastructure. Just write your code, deploy, and let the cloud provider handle scaling, uptime, and maintenance. For lightweight, event-driven apps or anything where usage can spike unpredictably, this is the most efficient path.

Scenario 2: You Need Control Over Everything

Containers are your solution if you’re managing a complex system with multiple services or dependencies. For instance, if you’re running a large-scale application with microservices, containers let you define the environment precisely, ensuring everything works as expected. Tools like Kubernetes give you the power to manage and scale these workloads. You’ll need to manage infrastructure, but the flexibility you get in return is unmatched.

Scenario 3: Your Traffic is All Over the Place

Imagine you’re running a platform like a ticketing system for major events. Traffic could be flat for weeks and then explode in minutes. Serverless is perfect here. You only pay for the actual resources your app uses, and it scales automatically to meet the demand. Containers can handle this too, but you’d need to configure and manage scaling yourself, which might mean extra effort and cost.

Scenario 4: You’re Migrating Legacy Systems

If you’re modernizing a legacy application with a lot of custom configurations, containers give you the flexibility you need. You can replicate the existing environment inside containers and transition gradually without worrying about compatibility issues. Serverless, in contrast, might require rewriting significant portions of your code to fit the supported runtimes and frameworks.

Scenario 5: Cost is a Key Factor

For unpredictable workloads, serverless is typically more cost-effective because you only pay for what you use. Containers can be economical too, but only if your resource usage is consistent and predictable. Otherwise, you risk paying for idle capacity, which can add up quickly.

So, Which One Should You Pick?

Here’s the deal:

- Go Serverless if you want speed, simplicity, and scalability without managing infrastructure. It’s great for apps with sporadic demand or short-lived tasks.

- Choose Containers if you need full control, are working with complex systems, or are migrating legacy workloads.
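The rules of thumb above can be written down as a small checklist function. This is only an illustrative encoding of this article's guidance, with invented field names, not a formal decision model; real decisions weigh more factors.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical description of a project's needs."""
    traffic_is_spiky: bool
    long_running: bool
    needs_custom_runtime: bool
    legacy_migration: bool
    wants_minimal_ops: bool


def recommend(w: Workload) -> str:
    # Custom environments or legacy migrations point to containers.
    if w.needs_custom_runtime or w.legacy_migration:
        return "containers"
    # Steady, long-running services tend to be cheaper on allocated capacity.
    if w.long_running and not w.traffic_is_spiky:
        return "containers"
    # Sporadic demand or a wish to skip infrastructure work favors serverless.
    if w.traffic_is_spiky or w.wants_minimal_ops:
        return "serverless"
    return "either; weigh cost and control"


if __name__ == "__main__":
    mvp = Workload(traffic_is_spiky=True, long_running=False,
                   needs_custom_runtime=False, legacy_migration=False,
                   wants_minimal_ops=True)
    print(recommend(mvp))  # serverless
```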

Take a step back, think about your project’s needs, and decide what makes the most sense. The right choice depends entirely on your priorities—don’t overcomplicate it.

Importance of Serverless and Containers Security

No matter how innovative or efficient your architecture is—serverless or containers—it’s only as good as its security. Both models come with unique challenges, and at CloudDefense.AI, we specialize in ensuring your cloud infrastructure stays secure, no matter what you’re running. Let’s talk specifics.

Serverless Security

Serverless simplifies development and deployment, but don’t mistake simplicity for security. Here are key areas that need attention:

- Function-Level Vulnerabilities: Each serverless function is a potential entry point. It’s critical to scan and secure every function’s code for vulnerabilities.

- Misconfigured Permissions: Over-permissioned roles can lead to privilege escalation. Apply the principle of least privilege, restricting each function’s access to only what it needs.

- Event Triggers: Functions are triggered by events like HTTP requests, message queues, or cloud storage updates. Malicious inputs or unchecked data in these triggers can exploit your system.

At CloudDefense.AI, we help you monitor and secure these moving parts, offering real-time insights into vulnerabilities and ensuring compliance with security best practices for serverless architectures.
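Least privilege is easy to state and easy to drift from. As an illustration, here is a hypothetical checker that flags full wildcards (`*` or `service:*`) in Allow statements of a policy written in AWS IAM's JSON policy format. It is not an AWS tool, the table ARN and actions are placeholders, and a real review would also consider conditions, resource-based policies, and narrower wildcards.

```python
def overly_broad_statements(policy: dict) -> list[str]:
    """Flag Allow statements whose actions or resources use full wildcards."""
    findings = []
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # IAM also accepts a single statement object
        statements = [statements]
    for i, stmt in enumerate(statements):
        if stmt.get("Effect") != "Allow":
            continue
        actions = stmt.get("Action", [])
        resources = stmt.get("Resource", [])
        # Action and Resource may each be a single string or a list of strings.
        actions = [actions] if isinstance(actions, str) else actions
        resources = [resources] if isinstance(resources, str) else resources
        for action in actions:
            if action == "*" or action.endswith(":*"):
                findings.append(f"statement {i}: wildcard action {action!r}")
        for resource in resources:
            if resource == "*":
                findings.append(f"statement {i}: wildcard resource {resource!r}")
    return findings


if __name__ == "__main__":
    over_permissioned = {
        "Version": "2012-10-17",
        "Statement": [{"Effect": "Allow", "Action": "dynamodb:*", "Resource": "*"}],
    }
    least_privilege = {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": ["dynamodb:GetItem", "dynamodb:PutItem"],
            # Placeholder ARN: scope the function to the one table it uses.
            "Resource": "arn:aws:dynamodb:us-east-1:123456789012:table/Orders",
        }],
    }
    print(overly_broad_statements(over_permissioned))
    # ["statement 0: wildcard action 'dynamodb:*'", "statement 0: wildcard resource '*'"]
    print(overly_broad_statements(least_privilege))  # []
```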

Securing Containerized Workloads

Containers offer control and portability, but their shared resources and layered architecture come with risks:

- Misconfigurations: Incorrect network policies, excessive privileges, or weak secrets management.

- Runtime Threats: Attackers exploiting vulnerabilities within running containers.

- Supply Chain Risks: Compromised base images or dependencies that make their way into production.
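To make the misconfiguration and supply-chain points concrete, the sketch below checks a Kubernetes-style Pod manifest (as a Python dict, for example parsed from YAML) for a few well-known risky settings: privileged containers, missing `runAsNonRoot`, privilege escalation left enabled, host networking, and images referenced by a mutable tag instead of a digest. It is a hypothetical, deliberately small checker, not a complete posture-management tool.

```python
def audit_pod(pod: dict) -> list[str]:
    """Return human-readable findings for risky settings in a Pod manifest."""
    findings = []
    spec = pod.get("spec", {})
    if spec.get("hostNetwork"):
        findings.append("pod: hostNetwork is enabled")
    pod_ctx = spec.get("securityContext", {})
    for c in spec.get("containers", []):
        name = c.get("name", "<unnamed>")
        ctx = c.get("securityContext", {})
        if ctx.get("privileged"):
            findings.append(f"{name}: runs privileged")
        # A container-level setting overrides the pod-level one.
        if not ctx.get("runAsNonRoot", pod_ctx.get("runAsNonRoot", False)):
            findings.append(f"{name}: runAsNonRoot not set")
        # Escalation is permitted unless explicitly disabled.
        if ctx.get("allowPrivilegeEscalation", True):
            findings.append(f"{name}: allowPrivilegeEscalation not disabled")
        image = c.get("image", "")
        if "@sha256:" not in image:
            findings.append(f"{name}: image {image!r} not pinned by digest")
    return findings


if __name__ == "__main__":
    risky = {
        "spec": {
            "containers": [{
                "name": "web",
                "image": "example.com/web:latest",  # placeholder image
                "securityContext": {"privileged": True},
            }]
        }
    }
    for finding in audit_pod(risky):
        print(finding)
```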

How CloudDefense.AI Helps:

- KSPM for Kubernetes: Our Kubernetes Security Posture Management (KSPM) tool identifies and remediates misconfigurations, ensuring clusters are secure and compliant.

- Container Scanning: Proactively scan images for vulnerabilities and compliance issues before deployment.

- Runtime Threat Detection: Monitor container activities, flag anomalies, and block threats in real time.

Why You Need an Integrated Security Platform

With the growing complexity of cloud-native architectures, siloed security tools aren’t enough. A comprehensive solution like CloudDefense.AI’s CNAPP (cloud-native application protection platform) provides:

- Unified visibility into both serverless and containerized workloads.

- Centralized management of vulnerabilities, permissions, and runtime threats.

- Automated remediation to minimize human effort and speed up response times.

Security shouldn’t slow you down, whether you’re running serverless workloads or containers. With CloudDefense.AI, you can innovate confidently, knowing your applications are protected at every stage: build, deploy, and run. Let’s secure your cloud-native journey together.