How can you ensure your software meets the highest standards of quality through efficient quality assurance (QA) testing? Today, user expectations and security concerns are at an all-time high, and adopting the right software testing best practices is essential.

These practices not only catch errors early but also save time, reduce costs, and deliver exceptional user experiences. This post covers proven techniques and actionable insights to help you streamline your QA process and build reliable, high-performing applications.

By the end, you’ll have a clear roadmap to elevate your software quality and meet the demands of the competitive software development industry.

Best Practices for Software Testing Projects

Poor quality assurance often leads to product failures, driving away customers and exposing applications to security risks. To prevent this, companies implement Software Quality Assurance (SQA), a key part of quality management.

SQA focuses on creating a culture of continuous improvement by setting clear, measurable quality standards and ensuring reliable and high-performing software.

Understand Requirements and Define Clear Objectives

A successful software testing process starts with a good understanding of the project’s requirements and the establishment of clear, well-defined testing objectives.

This involves close collaboration with stakeholders, including business analysts, product owners, and development teams, to ensure that all functional and non-functional requirements are thoroughly documented and understood.

Using the SMART criteria—Specific, Measurable, Achievable, Relevant, and Time-bound—to define testing objectives is key to creating a structured and efficient testing framework.

- Specific: Objectives should pinpoint exact areas of focus, such as validating login functionality, ensuring API performance under high traffic, or confirming that the application addresses the risks listed in the OWASP Top 10. Ambiguous goals often lead to oversights, so clarity is essential.

- Measurable: Define quantifiable benchmarks to track progress. For instance, aim to achieve 95% test case coverage, reduce the defect leakage rate to below 5%, or ensure the application loads within 2 seconds for 90% of users. Measurable outcomes give teams clear indicators of success.

- Achievable: Objectives must align with the team’s capabilities and available resources. For example, if the testing team has limited automation expertise, automating 70% of regression tests in the first sprint may be unrealistic. Instead, set achievable goals, such as automating 30% in the first sprint, with a plan to increase coverage incrementally.

- Relevant: Testing goals should directly align with the project’s broader objectives. For instance, if the project’s priority is a secure payment gateway, allocating resources to rigorous penetration testing and compliance verification (e.g., PCI DSS) is more relevant than exhaustive exploratory testing on unrelated features.

- Time-bound: Establish deadlines to ensure progress remains on schedule. For example, define that functional testing for the first module should be completed within two weeks, and performance testing for the application should conclude by the end of the second sprint.

By employing the SMART criteria, teams can ensure their testing objectives are precise, actionable, and aligned with project priorities. This approach eliminates redundancy, optimizes resource allocation, and focuses efforts on delivering high-quality software within defined timelines.
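
To make the "Measurable" criterion concrete, here is a minimal Python sketch that checks a test cycle against the example targets above (95% coverage, defect leakage below 5%). The `CycleStats` fields and the formulas are assumptions for illustration: teams define coverage and leakage in different ways, so adapt them to your own definitions.

```python
from dataclasses import dataclass


@dataclass
class CycleStats:
    """Raw counts collected at the end of a test cycle (hypothetical fields)."""
    requirements_total: int
    requirements_with_tests: int
    defects_found_in_testing: int
    defects_found_after_release: int


def coverage_pct(stats: CycleStats) -> float:
    """Percentage of requirements that have at least one test case."""
    if stats.requirements_total == 0:
        return 0.0
    return 100.0 * stats.requirements_with_tests / stats.requirements_total


def defect_leakage_pct(stats: CycleStats) -> float:
    """Percentage of all known defects that escaped to production."""
    total = stats.defects_found_in_testing + stats.defects_found_after_release
    if total == 0:
        return 0.0
    return 100.0 * stats.defects_found_after_release / total


def meets_objectives(stats: CycleStats,
                     min_coverage: float = 95.0,
                     max_leakage: float = 5.0) -> bool:
    """True when coverage is at or above target and leakage is below target."""
    return (coverage_pct(stats) >= min_coverage
            and defect_leakage_pct(stats) < max_leakage)


# Example with made-up numbers.
stats = CycleStats(
    requirements_total=200,
    requirements_with_tests=192,
    defects_found_in_testing=58,
    defects_found_after_release=2,
)
print(f"coverage: {coverage_pct(stats):.1f}%")
print(f"leakage:  {defect_leakage_pct(stats):.1f}%")
print("objectives met:", meets_objectives(stats))
```

Keeping the thresholds as parameters lets the same check serve an early sprint with modest targets and a later one with stricter targets.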

Create Detailed Test Plans and Strategies

A well-structured test plan serves as a detailed roadmap for the entire testing process, ensuring that every aspect of the project is systematically covered. This document should provide a clear framework, including:

- Scope of Testing: Define what will and won’t be tested. This helps teams focus on key areas, from functional testing to performance, security, and usability checks.

- Testing Objectives: Specify the goals of testing, such as ensuring system stability, identifying security vulnerabilities, or validating user experience.

- Timelines and Milestones: Establish a realistic testing schedule that aligns with the project’s overall timeline. Include key milestones, such as test execution, defect resolution, and final validation phases.

- Resource Allocation: Detail the human and technical resources required. This includes assigning roles to team members (testers, developers, QA analysts), estimating workloads, and identifying hardware or software tools needed for testing.

- Test Environment: Specify the setup for the test environment, including configurations, data requirements, and any dependencies on third-party systems.

- Tools and Technologies: Identify the testing tools (e.g., automated testing frameworks, bug-tracking systems, and performance monitoring software) that will be used to simplify the process.

- Deliverables: Define the expected outputs at each stage, such as test cases, bug reports, and test summary reports.

Design Detailed and Effective Test Cases

Test cases are the foundation of any thorough software testing process, serving as precise, step-by-step instructions for validating the functionality, performance, and reliability of individual software components. Well-designed test cases enable testers to systematically verify that the software behaves as expected under various conditions, minimizing the likelihood of overlooked issues.

Key characteristics of effective test cases include:

- Clarity and Detail: Each test case should be written clearly and concisely, leaving no ambiguity for the tester. Include detailed steps for execution, input data, preconditions, and postconditions.

- Comprehensive Coverage: Incorporate both positive and negative test scenarios to validate not only that the software performs as intended but also how it handles invalid or unexpected inputs.

- Reusability: Design test cases to be modular and reusable across different testing cycles and similar projects, enhancing efficiency and consistency in testing efforts.

- Traceability: Map test cases directly to requirements, user stories, or business rules to ensure complete coverage and enable easier tracking of progress.

- Expected Results: Define the expected outcome for each step, including system outputs, data changes, or UI updates. This makes it easier to identify deviations and diagnose issues.

Test cases should be regularly reviewed and updated as the software evolves. Changes in requirements, new features, or resolved defects may necessitate modifications to existing cases or the creation of new ones. This iterative approach ensures that test cases remain relevant, effective, and aligned with the software’s current state.
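
As an illustration of these characteristics, the sketch below shows what a small automated test case might look like with Python's built-in `unittest` module. The function under test (`validate_username`), its rules, and the requirement ID `REQ-101` are hypothetical and exist only to show clear inputs, expected results, positive and negative scenarios, and traceability.

```python
import unittest


def validate_username(name: str) -> bool:
    """Hypothetical function under test.

    Accepts names of 3 to 20 characters made up of alphanumeric
    characters or underscores.
    """
    return 3 <= len(name) <= 20 and all(c.isalnum() or c == "_" for c in name)


class UsernameValidationTests(unittest.TestCase):
    """Traces to requirement REQ-101 (hypothetical ID): username rules."""

    def test_accepts_valid_username(self):
        # Positive scenario. Input: a well-formed name. Expected: accepted.
        self.assertTrue(validate_username("qa_engineer1"))

    def test_rejects_too_short_username(self):
        # Negative scenario. Input: 2 characters. Expected: rejected.
        self.assertFalse(validate_username("ab"))

    def test_rejects_illegal_characters(self):
        # Negative scenario. Input: space and punctuation. Expected: rejected.
        self.assertFalse(validate_username("bad name!"))
```

Saved as a module, these tests can be run with `python -m unittest`. Each test states its input and expected result in a comment, and the class docstring carries the requirement reference so coverage can be traced back.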

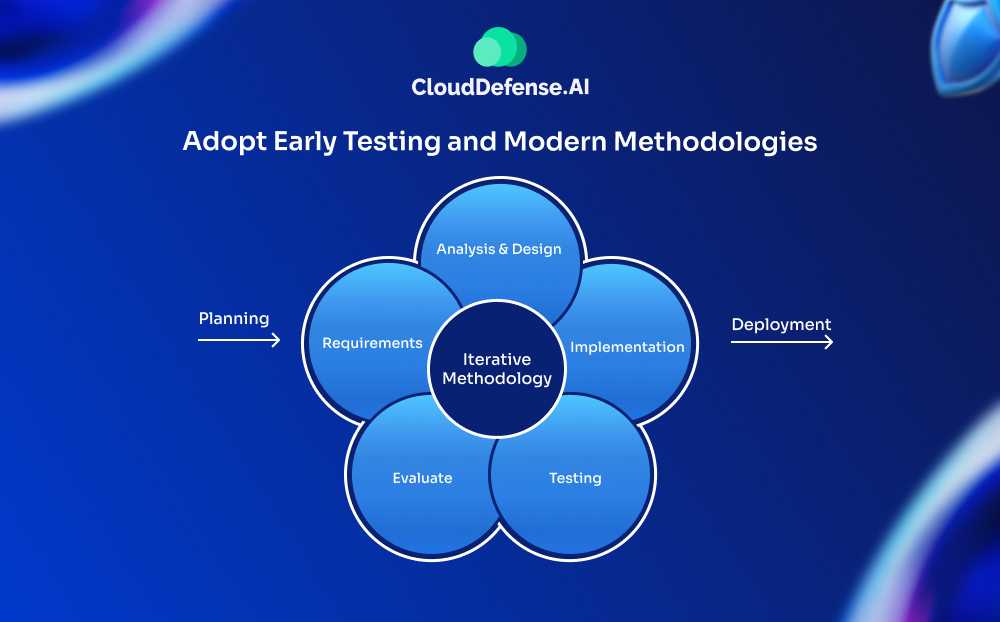

Adopt Early Testing and Modern Methodologies

Incorporating testing early in the development lifecycle—commonly known as shifting left—is a critical best practice that enables teams to identify and address defects at their earliest stages. Detecting issues early not only reduces the cost and effort required to fix them but also minimizes the risk of cascading failures that could disrupt later stages of development.

Key strategies for early testing include:

- Incorporate Testing in Initial Phases: Begin with unit and integration tests during the development phase. This allows teams to catch defects at the module level before they propagate into the larger system.

- Continuous Feedback Loops: Promote close collaboration between developers, testers, and other stakeholders to ensure rapid feedback and quick resolution of issues.

To complement early testing, adopting modern development methodologies such as Agile and DevOps can further enhance software quality:

- Agile: Emphasizes iterative development and continuous feedback, integrating testing into each sprint to ensure incremental improvements. Agile testing focuses on adaptability, rapid response to changes, and delivering a high-quality product that aligns with evolving customer needs.

- DevOps: Extends Agile principles by promoting a culture of collaboration between development and operations teams. DevOps practices, such as Continuous Integration (CI) and Continuous Deployment (CD), embed testing into the automated pipeline, enabling smooth and frequent validation of code changes.

These methodologies emphasize continuous testing, where automated tests are executed at every stage of the development pipeline. This ensures rapid detection and resolution of defects, accelerating time-to-market while maintaining high software quality.

By adopting early testing and modern methodologies, organizations can simplify development processes, reduce costs, and deliver reliable, high-performing software with confidence.

Combine Different Testing Types for Comprehensive Coverage

No single testing type can ensure complete software quality. Combine functional, regression, performance, security, and exploratory testing to cover all aspects of the application. Each testing type targets specific risks, and together they give a well-rounded picture of software reliability. By using a mix of automated and manual testing, teams can handle repetitive tasks efficiently while uncovering subtle bugs that require human judgment.

Report Bugs Clearly and Effectively

Effective bug reporting ensures developers can quickly replicate and address issues. Provide detailed information, including steps to reproduce the bug, expected versus actual results, screenshots, and logs if applicable. A well-documented bug report saves time by eliminating back-and-forth communication between testers and developers, accelerating the resolution process and keeping the project on schedule.
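
One way to keep reports consistent is to capture them in a fixed structure. The following Python sketch is only an illustration: the `BugReport` class, its field names, and the sample data are assumptions, not the format of any particular bug tracker.

```python
from dataclasses import dataclass, field


@dataclass
class BugReport:
    """Hypothetical bug report structure covering the details listed above."""
    title: str
    steps_to_reproduce: list[str]
    expected: str
    actual: str
    environment: str = "unspecified"
    attachments: list[str] = field(default_factory=list)  # screenshots, logs

    def is_actionable(self) -> bool:
        """A report needs steps plus expected and actual results to be useful."""
        return bool(self.steps_to_reproduce and self.expected and self.actual)

    def render(self) -> str:
        """Format the report as plain text with numbered reproduction steps."""
        lines = [
            f"Title: {self.title}",
            f"Environment: {self.environment}",
            "Steps to reproduce:",
        ]
        lines += [f"{i}. {step}"
                  for i, step in enumerate(self.steps_to_reproduce, start=1)]
        lines.append(f"Expected: {self.expected}")
        lines.append(f"Actual: {self.actual}")
        if self.attachments:
            lines.append("Attachments: " + ", ".join(self.attachments))
        return "\n".join(lines)


# Example with made-up data.
report = BugReport(
    title="Login button unresponsive after failed attempt",
    steps_to_reproduce=[
        "Open the login page",
        "Enter a wrong password and submit",
        "Enter the correct password and click Log in",
    ],
    expected="User is logged in",
    actual="Nothing happens when the button is clicked",
    environment="Staging, desktop browser",
    attachments=["console.log"],
)
print(report.render())
```

A check like `is_actionable()` could run before a report is filed, so incomplete reports are caught before they reach a developer.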

Involve Non-Testers in the Testing Process

Incorporate feedback from non-testers, such as business analysts, designers, and even end-users, to identify overlooked scenarios. Diverse perspectives can help uncover usability issues, edge cases, and real-world challenges that traditional testing might miss. Encouraging cross-functional collaboration enriches the testing process and strengthens overall software quality.

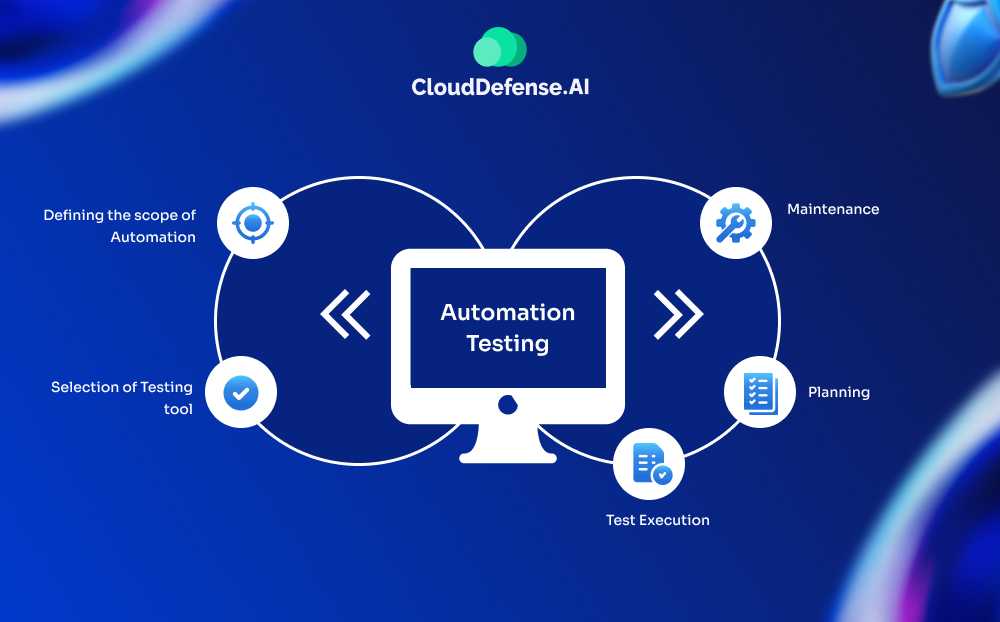

Use Automation Testing Judiciously

Automation testing is a powerful tool but must be used strategically. Automate repetitive and time-consuming tests, such as regression or load testing, to improve efficiency and consistency. Avoid automating tests with frequently changing requirements or those requiring human intuition. Strike a balance between manual and automated testing to maximize coverage and minimize effort.

Implement Continuous Testing in DevOps

Continuous testing ensures that software is validated at every stage of the development lifecycle. Integrated into CI/CD pipelines, it provides real-time feedback, allowing teams to address issues promptly. Continuous testing reduces bottlenecks and accelerates delivery while maintaining software reliability. Use tools that support automated builds, test executions, and reporting for smooth integration.

Monitor Testing Metrics and KPIs Regularly

Track key metrics, such as defect density, test case execution rate, and mean time to resolution, to evaluate testing efficiency. Metrics provide data-driven insights into the testing process, highlighting areas for improvement. Use them to assess team performance, predict timelines, and communicate progress with stakeholders. Regular monitoring ensures the testing process remains aligned with project goals.
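
The three metrics named above can be computed from simple counts and timestamps. The sketch below uses commonly seen definitions (defects per thousand lines of code, executed versus planned test cases, average time from open to close). These formulas are assumptions, so adjust them if your team measures differently.

```python
from datetime import datetime, timedelta


def defect_density(defects: int, lines_of_code: int) -> float:
    """Defects per thousand lines of code (KLOC)."""
    return defects / (lines_of_code / 1000)


def execution_rate(executed: int, planned: int) -> float:
    """Percentage of planned test cases that were actually executed."""
    return 100.0 * executed / planned


def mean_time_to_resolution(
    tickets: list[tuple[datetime, datetime]],
) -> timedelta:
    """Average time between a defect being opened and being closed."""
    total = sum((closed - opened for opened, closed in tickets), timedelta())
    return total / len(tickets)


# Example with made-up data: two defects, resolved in 8 and 24 hours.
tickets = [
    (datetime(2024, 1, 1, 9, 0), datetime(2024, 1, 1, 17, 0)),
    (datetime(2024, 1, 2, 9, 0), datetime(2024, 1, 3, 9, 0)),
]
print("defect density:", defect_density(defects=30, lines_of_code=15_000))
print("execution rate:", execution_rate(executed=180, planned=200))
print("mean time to resolution:", mean_time_to_resolution(tickets))
```

Tracking these values per sprint or release, rather than as one-off numbers, makes trends visible and gives stakeholders a basis for comparison.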

Promote a Collaborative Work Environment

Create an environment where testers, developers, and stakeholders collaborate smoothly. Encourage open communication and knowledge-sharing sessions to resolve challenges effectively. A culture of collaboration improves team morale and ensures that testing efforts are well-coordinated, leading to higher-quality software outcomes.

Separate Testing Environments from Development

Always conduct testing in a dedicated environment that mirrors the production setup. This isolation keeps unfinished code and developer-specific configuration from skewing results and ensures that real-world conditions are simulated accurately. Separate environments also allow for rigorous testing of updates or new features without impacting live systems.

Conduct Negative Testing

Negative testing, or error path testing, ensures the software can handle invalid inputs, unexpected user behaviors, and system failures gracefully. Test edge cases, incorrect data formats, and unauthorized actions to verify the robustness of the application. This approach strengthens software reliability and helps prevent potential vulnerabilities from being exploited.
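
A negative test feeds the software inputs it should refuse and asserts that it refuses them cleanly. Here is a minimal sketch using Python's `unittest` module. The `parse_quantity` function and its limit of 1000 are hypothetical stand-ins for real input-handling code.

```python
import unittest

MAX_QUANTITY = 1000  # hypothetical business limit


def parse_quantity(raw: str) -> int:
    """Hypothetical function under test: parse an order quantity.

    Raises ValueError for anything that is not a whole number
    between 1 and MAX_QUANTITY.
    """
    try:
        value = int(raw.strip())
    except (ValueError, AttributeError):
        # AttributeError covers non-string input such as None.
        raise ValueError(f"not a whole number: {raw!r}") from None
    if not 1 <= value <= MAX_QUANTITY:
        raise ValueError(f"quantity out of range: {value}")
    return value


class ParseQuantityNegativeTests(unittest.TestCase):
    def test_rejects_invalid_inputs(self):
        # Empty, non-numeric, wrong format, out of range, and missing input.
        invalid_inputs = ["", "abc", "12.5", "-1", "0", "1001", None]
        for raw in invalid_inputs:
            with self.subTest(raw=raw):
                with self.assertRaises(ValueError):
                    parse_quantity(raw)

    def test_accepts_boundary_values(self):
        # Edge cases on the valid side of each boundary.
        self.assertEqual(parse_quantity("1"), 1)
        self.assertEqual(parse_quantity("1000"), 1000)
```

Using `subTest` reports each failing input separately, which makes it easier to see exactly which invalid value slipped through.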

Balance In-House and Outsourced Testing Efforts

Combine in-house expertise with outsourced testing services to maximize efficiency. In-house teams provide domain knowledge and align closely with project goals, while external testers offer fresh perspectives and specialized skills. This balance ensures thorough coverage and reduces blind spots in the testing process.

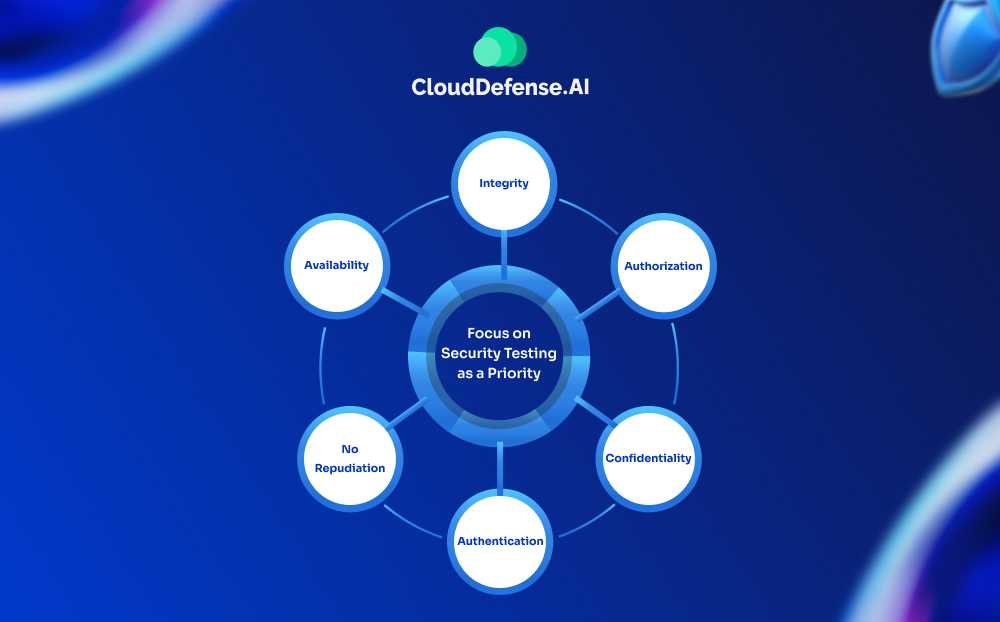

Focus on Security Testing as a Priority

With increasing cyber threats, security testing is non-negotiable. Validate the software’s resilience against common attacks, such as SQL injection and cross-site scripting (XSS). Regular penetration testing and adherence to security standards protect sensitive data and uphold user trust. Make security a core component of the testing lifecycle.
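
As a small example of what an automated security check can look like, the sketch below tests a lookup function against a classic SQL injection payload, using Python's built-in `sqlite3` module and an in-memory database. The `users` table and the `find_user` function are hypothetical, and a check like this complements penetration testing rather than replacing it.

```python
import sqlite3


def make_test_db() -> sqlite3.Connection:
    """Create an in-memory database with a hypothetical users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
    conn.executemany(
        "INSERT INTO users (username) VALUES (?)",
        [("alice",), ("bob",)],
    )
    return conn


def find_user(conn: sqlite3.Connection, username: str) -> list:
    """Hypothetical function under test.

    Uses a parameterized query, so `username` is treated strictly as data
    and never as part of the SQL statement.
    """
    cur = conn.execute(
        "SELECT id, username FROM users WHERE username = ?",
        (username,),
    )
    return cur.fetchall()


def test_injection_payload_returns_no_rows():
    conn = make_test_db()
    # If the query were built by string concatenation, this payload would
    # turn the WHERE clause into a condition that matches every row.
    payload = "' OR '1'='1"
    assert find_user(conn, payload) == []


def test_legitimate_lookup_still_works():
    conn = make_test_db()
    assert find_user(conn, "alice") == [(1, "alice")]
```

The test functions follow the `test_` naming convention, so a runner such as pytest can collect them, and they can also be called directly.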

Conclusion

High-quality products are not the result of luck. They evolve from a concerted effort to create a culture of excellence, usually by establishing dedicated quality assurance teams to oversee the software testing and development cycles. Companies that are serious about competing on quality adopt all, or at least a combination, of the software testing best practices listed in this article.